Summary: Researchers developed an AI model of the fruit fly brain to understand how vision guides behavior. By genetically silencing specific visual neurons and observing changes in behavior, they trained the AI to predict neural activity and behavior accurately.

Their findings reveal that multiple neuron combinations, rather than single types, process visual data in a complex “population code.” This breakthrough paves the way for future research into the human visual system and related disorders.

Key Facts:

- CSHL scientists created an AI model of the fruit fly brain to study vision-guided behavior.

- The AI predicts neural activity by analyzing changes in behavior after silencing specific visual neurons.

- The research revealed a complex “population code” where multiple neuron combinations process visual data.

Source: CSHL

We’ve been told, “The eyes are the window to the soul.” Well, windows work two ways. Our eyes are also our windows to the world. What we see and how we see it help determine how we move through the world. In other words, our vision helps guide our actions, including social behaviors.

Now, a young Cold Spring Harbor Laboratory (CSHL) scientist has uncovered a major clue to how this works. He did it by building a special AI model of the common fruit fly brain.

CSHL Assistant Professor Benjamin Cowley and his team honed their AI model through a technique they developed called “knockout training.” First, they recorded a male fruit fly’s courtship behavior—chasing and singing to a female.

Next, they genetically silenced specific types of visual neurons in the male fly and trained their AI to predict the resulting changes in behavior. By repeating this process with many different visual neuron types, they were able to get the AI to accurately predict how the real fruit fly would act in response to any view of the female.

“We can actually predict neural activity computationally and ask how specific neurons contribute to behavior,” Cowley says. “This is something we couldn’t do before.”

With their new AI, Cowley’s team discovered that the fruit fly brain uses a “population code” to process visual data. Instead of one neuron type linking each visual feature to one action, as previously assumed, many combinations of neurons were needed to sculpt behavior.

A chart of these neural pathways looks like an incredibly complex subway map and will take years to decipher. Still, it gets us where we need to go. It enables Cowley’s AI to predict how a real-life fruit fly will behave when presented with visual stimuli.

Does this mean AI could someday predict human behavior? Not so fast. Fruit fly brains contain about 100,000 neurons. The human brain has almost 100 billion.

“This is what it’s like for the fruit fly. You can imagine what our visual system is like,” says Cowley, referring to the subway map.

Still, Cowley hopes his AI model will someday help us decode the computations underlying the human visual system.

“This is going to be decades of work. But if we can figure this out, we’re ahead of the game,” says Cowley. “By learning [fly] computations, we can build a better artificial visual system. More importantly, we’re going to understand disorders of the visual system in much better detail.”

How much better? You’ll have to see it to believe it.

About this AI and neuroscience research news

Author: Sara Giarnieri

Source: CSHL

Contact: Sara Giarnieri – CSHL

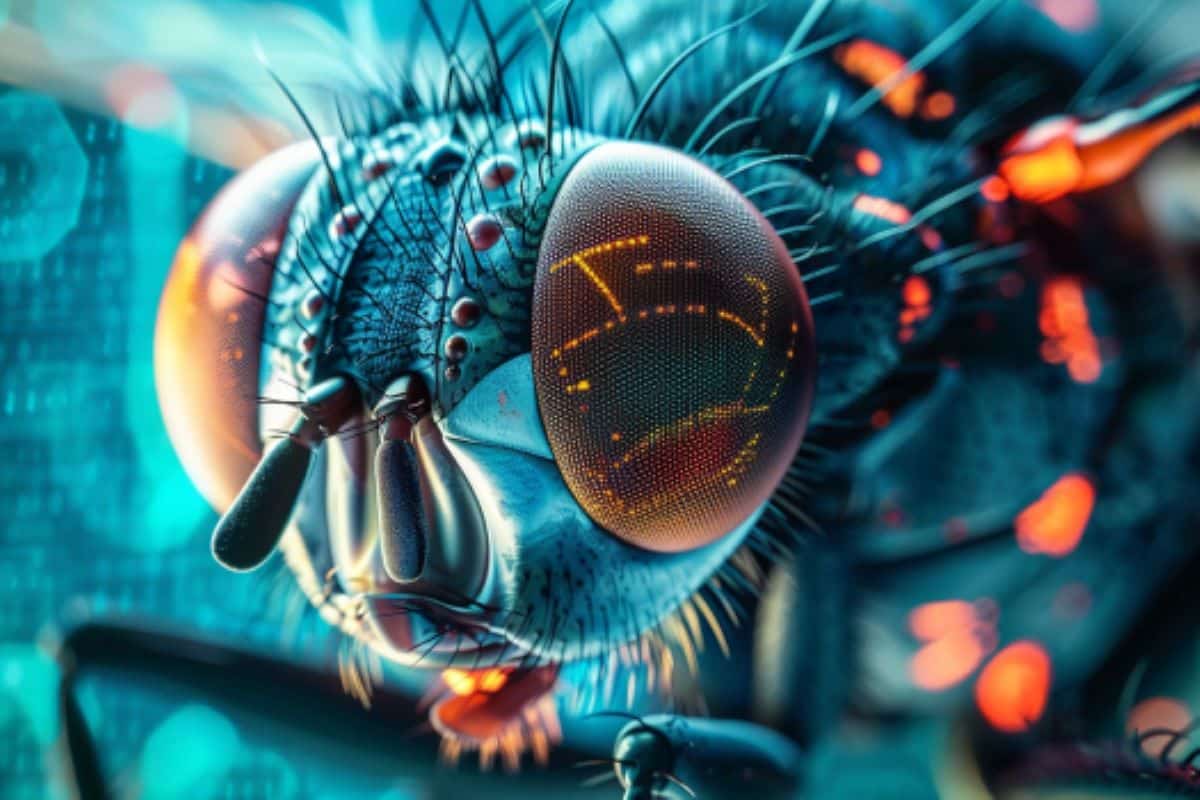

Image: The image is credited to Neuroscience News

Original Research: Open access.

“Mapping model units to visual neurons reveals population code for social behaviour” by Benjamin Cowley et al. Nature

Abstract

Mapping model units to visual neurons reveals population code for social behaviour

The rich variety of behaviours observed in animals arises through the interplay between sensory processing and motor control. To understand these sensorimotor transformations, it is useful to build models that predict not only neural responses to sensory input but also how each neuron causally contributes to behaviour.

Here we demonstrate a novel modelling approach to identify a one-to-one mapping between internal units in a deep neural network and real neurons by predicting the behavioural changes that arise from systematic perturbations of more than a dozen neuronal cell types.

A key ingredient that we introduce is ‘knockout training’, which involves perturbing the network during training to match the perturbations of the real neurons during behavioural experiments. We apply this approach to model the sensorimotor transformations of Drosophila melanogaster males during a complex, visually guided social behaviour.

The visual projection neurons at the interface between the optic lobe and central brain form a set of discrete channels, and prior work indicates that each channel encodes a specific visual feature to drive a particular behaviour.

Our model reaches a different conclusion: combinations of visual projection neurons, including those involved in non-social behaviours, drive male interactions with the female, forming a rich population code for behaviour.

Overall, our framework consolidates behavioural effects elicited from various neural perturbations into a single, unified model, providing a map from stimulus to neuronal cell type to behaviour, and enabling future incorporation of wiring diagrams of the brain into the model.
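To make the idea of knockout training concrete, here is a minimal toy sketch in Python. It is not the authors' model: the network is a tiny linear one, the "fly" is synthetic, and every name (`forward`, `train_step`, `make_masks`, `mean_error`, `demo`) and every number is an assumption chosen for illustration. It shows only the core mechanism the abstract describes: when a training example comes from a fly with one cell type silenced, the matching model unit is silenced too.

```python
import random

def forward(W, v, x, mask):
    """Return (hidden, output); mask[k] = 0.0 silences hidden unit k."""
    hidden = [m * sum(w * xi for w, xi in zip(row, x)) for row, m in zip(W, mask)]
    output = sum(vk * hk for vk, hk in zip(v, hidden))
    return hidden, output

def train_step(W, v, x, mask, target, lr):
    """One SGD step on squared error with the knockout mask applied.

    A silenced unit receives zero gradient, so it is not updated.
    """
    hidden, output = forward(W, v, x, mask)
    err = output - target
    for k in range(len(W)):
        for d in range(len(x)):
            W[k][d] -= lr * err * v[k] * mask[k] * x[d]
        v[k] -= lr * err * hidden[k]
    return 0.5 * err * err

def make_masks(num_units):
    """Control condition (nothing silenced) plus one knockout per unit."""
    control = [1.0] * num_units
    knockouts = [[0.0 if j == k else 1.0 for j in range(num_units)]
                 for k in range(num_units)]
    return [control] + knockouts

def mean_error(W, v, true_W, true_v, stimuli, masks):
    """Average squared error across all stimuli and knockout conditions."""
    total = 0.0
    for x in stimuli:
        for mask in masks:
            _, predicted = forward(W, v, x, mask)
            _, observed = forward(true_W, true_v, x, mask)
            total += 0.5 * (predicted - observed) ** 2
    return total / (len(stimuli) * len(masks))

def demo(seed=0, num_units=4, num_features=3, steps=10000, lr=0.02):
    """Train on a synthetic 'fly' (an assumption, not real data)."""
    rng = random.Random(seed)

    def rand_vec(n, scale):
        return [rng.uniform(-scale, scale) for _ in range(n)]

    # Hypothetical ground truth standing in for the real animal.
    true_W = [rand_vec(num_features, 1.0) for _ in range(num_units)]
    true_v = rand_vec(num_units, 1.0)
    # Model parameters, randomly initialized.
    W = [rand_vec(num_features, 0.5) for _ in range(num_units)]
    v = rand_vec(num_units, 0.5)

    masks = make_masks(num_units)
    held_out = [rand_vec(num_features, 1.0) for _ in range(50)]
    before = mean_error(W, v, true_W, true_v, held_out, masks)

    for _ in range(steps):
        x = rand_vec(num_features, 1.0)   # a random "visual stimulus"
        mask = rng.choice(masks)          # which cell type was silenced
        _, target = forward(true_W, true_v, x, mask)  # "observed" behavior
        train_step(W, v, x, mask, target, lr)

    after = mean_error(W, v, true_W, true_v, held_out, masks)
    return before, after
```

Calling `demo()` returns the held-out error before and after training. In this toy setting, the knockout conditions are what tie each unit to one cell type: with control data alone, only the summed contribution of all units would be constrained.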
